Resource-Efficient Multilingual Text-to-Image Learning

Content: coming soon...

Measuring Dataset Responsibility (Nature Machine Intelligence, 2024)

We conduct an audit by evaluating the *responsible rubric* calculated with the proposed framework. After surveying over 100 datasets, we analyze 60 distinct datasets in detail, and this analysis highlights a universal susceptibility to fairness, privacy, and regulatory compliance issues. Our findings emphasize the urgent need to revise dataset creation methodologies within the scientific community, especially in light of global advances in data protection legislation. We argue that the study is directly relevant to the contemporary AI context, offering timely insights and recommendations for the ongoing evolution of AI technologies.

For complete information, see https://iab-rubric.org/resources/codes/fpr.

This research proposes a novel approach for learning kernel Support Vector Machines (SVMs) from large-scale data with reduced computation time. The proposed approach, termed Subclass Reduced Set SVM (SRS-SVM), uses the subclass structure of the data to estimate a candidate support vector set. Since the cardinality of the candidate support vector set is only a fraction of that of the training set, learning the SVM from the candidate set requires less time without significantly changing the decision boundary. SRS-SVM depends on an input parameter that requires domain knowledge, namely the number of subclasses. To reduce this dependency and make the approach less sensitive to the subclass parameter, we extend SRS-SVM into a robust, improved hierarchical model termed Hierarchical Subclass Reduced Set SVM (HSRS-SVM).

Since SRS-SVM and HSRS-SVM split the non-linear optimization problem into multiple smaller linear optimization problems, both are amenable to parallelization. The effectiveness of the proposed approaches is evaluated on four synthetic and six real-world datasets, and their performance is compared with a traditional solver (LibSVM) and state-of-the-art approaches such as divide-and-conquer SVM, FastFood, and LLSVM. The experimental results show that the proposed approach achieves similar classification accuracies while requiring several-fold less computation time than existing solvers. We further demonstrate the suitability and improved performance of HSRS-SVM with deep learning features for face recognition on the Labeled Faces in the Wild (LFW) dataset.
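A minimal sketch of the candidate-support-vector idea follows. It is not the authors' toolbox; it assumes (our assumption) that subclasses are found with k-means inside each class, that one small linear SVM is fitted for every pair of opposite-class subclasses (trained here with the Pegasos SGD solver, our choice of solver), and that points lying on or inside any of those margins form the candidate set. All function names (`kmeans`, `pegasos`, `candidate_support_vectors`) are hypothetical.

```python
import random
from typing import Dict, List, Sequence, Set, Tuple

Vector = Tuple[float, ...]


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def kmeans(points: List[Vector], k: int, iters: int = 20, seed: int = 0) -> List[int]:
    """Plain Lloyd's k-means; returns a subclass label for every point."""
    rng = random.Random(seed)
    k = min(k, len(points))
    centers = [list(c) for c in rng.sample(points, k)]
    labels = [0] * len(points)
    for _ in range(iters):
        labels = [min(range(k), key=lambda c: sq_dist(p, centers[c])) for p in points]
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old centre if a cluster becomes empty
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


def pegasos(X: List[Vector], y: List[int], lam: float = 0.01,
            epochs: int = 30, seed: int = 0) -> List[float]:
    """Linear SVM (hinge loss + L2 penalty) via Pegasos SGD.
    The bias is folded in as a constant feature 1.0 appended to each x."""
    rng = random.Random(seed)
    Xb = [list(x) + [1.0] for x in X]
    w = [0.0] * len(Xb[0])
    order = list(range(len(Xb)))
    t = 0
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)
            violated = y[i] * dot(w, Xb[i]) < 1  # margin check uses the current w
            w = [(1 - eta * lam) * wj for wj in w]
            if violated:
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, Xb[i])]
    return w


def candidate_support_vectors(X: List[Vector], y: List[int], n_subclasses: int = 2,
                              tol: float = 0.0, seed: int = 0) -> Set[int]:
    """Indices of training points kept for the final (kernel) SVM; labels are +1/-1."""
    pos = [i for i, lab in enumerate(y) if lab == 1]
    neg = [i for i, lab in enumerate(y) if lab == -1]

    def subclasses(idx: List[int]) -> List[List[int]]:
        # 1. split one class into (non-empty) subclasses with k-means
        labels = kmeans([X[i] for i in idx], n_subclasses, seed=seed)
        groups: Dict[int, List[int]] = {}
        for i, lab in zip(idx, labels):
            groups.setdefault(lab, []).append(i)
        return list(groups.values())

    candidates: Set[int] = set()
    # 2. one small linear SVM per (positive subclass, negative subclass) pair
    for p_grp in subclasses(pos):
        for n_grp in subclasses(neg):
            idx = p_grp + n_grp
            w = pegasos([X[i] for i in idx], [y[i] for i in idx], seed=seed)
            margins = {i: y[i] * dot(w, list(X[i]) + [1.0]) for i in idx}
            # 3. points on or inside the margin are candidate support vectors
            near = {i for i, m in margins.items() if m <= 1.0 + tol}
            if not near:  # fallback: the closest point on each side
                near = {min(p_grp, key=margins.get), min(n_grp, key=margins.get)}
            candidates |= near
    return candidates


if __name__ == "__main__":
    rng = random.Random(1)
    X = [(rng.gauss(2, 0.5), rng.gauss(2, 0.5)) for _ in range(20)] + \
        [(rng.gauss(-2, 0.5), rng.gauss(-2, 0.5)) for _ in range(20)]
    y = [1] * 20 + [-1] * 20
    cand = candidate_support_vectors(X, y, n_subclasses=2)
    print(f"{len(cand)} of {len(X)} points kept as candidate support vectors")
```

Each pairwise problem touches only two subclasses, so the loop iterations are independent and could be distributed across workers, which is the parallelization property noted above. Under this sketch, the kernel SVM (for example, LibSVM with an RBF kernel) would then be trained only on the points indexed by the returned set.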

The code for the toolbox will soon be released.

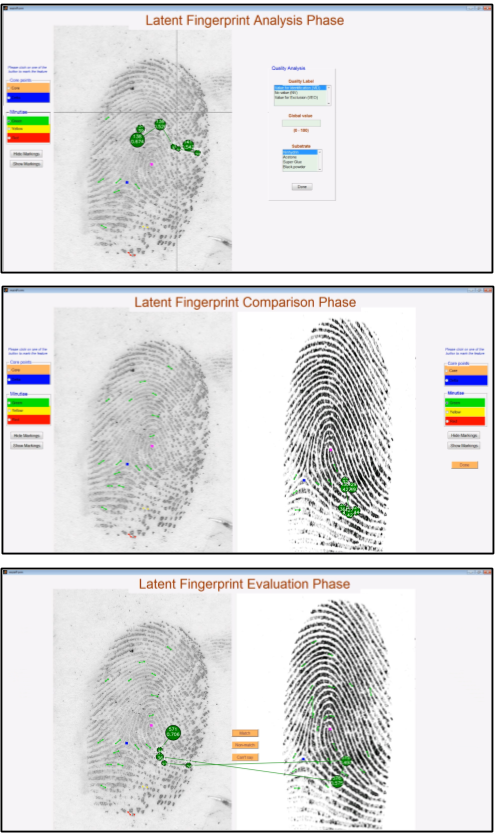

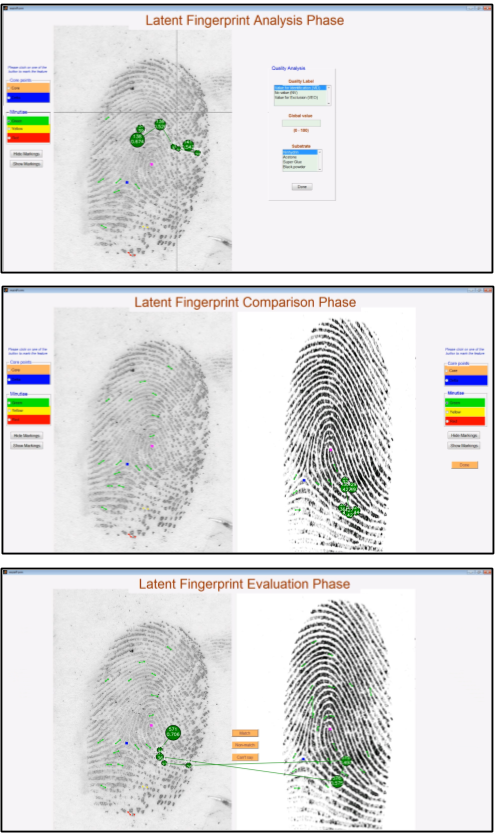

To assist the examiners and collect their annotations for comparison, each participant is shown a latent-exemplar fingerprint pair on a display with a resolution of 1920 x 1080 pixels, with each fingerprint displayed at 700 x 960 pixels. A custom tool was built for the examiners to perform the comparison, and sample screenshots of the tool at each stage of the ACE mechanism are provided. As part of this research, the tool will be released to the research community.
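The two resolutions above imply that the pair fits on one screen: 2 x 700 = 1400 <= 1920 pixels wide and 960 <= 1080 pixels high. A minimal sketch of that arithmetic follows, assuming (our assumption, not stated in the text) that the two fingerprints are placed side by side with equal horizontal gaps and centred vertically; `side_by_side_layout` is a hypothetical helper, not part of the released tool.

```python
from typing import List, Tuple


def side_by_side_layout(screen_w: int, screen_h: int, img_w: int, img_h: int,
                        n: int = 2) -> List[Tuple[float, float]]:
    """Top-left (x, y) of n equally sized images laid out in one row,
    with equal gaps between images and at both screen edges."""
    total_w = n * img_w
    if total_w > screen_w or img_h > screen_h:
        raise ValueError("images do not fit on the screen")
    gap = (screen_w - total_w) / (n + 1)  # leftover width split into n + 1 gaps
    top = (screen_h - img_h) / 2           # centre vertically
    return [(gap + i * (img_w + gap), top) for i in range(n)]


if __name__ == "__main__":
    # latent print on the left, exemplar on the right (hypothetical ordering)
    print(side_by_side_layout(1920, 1080, 700, 960))
```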

The code for the toolbox will soon be released.

Deep learning models are widely used for tasks such as face recognition and speech recognition. However, researchers have shown that these models are vulnerable to adversarial attacks, which compute perturbations that, when added to images, degrade the performance of deep learning models. In this research, we have developed a toolbox, termed SmartBox, for benchmarking the performance of adversarial attack detection and mitigation algorithms against face recognition. SmartBox is a Python-based toolbox that provides open-source implementations of adversarial detection and mitigation algorithms. The Extended Yale Face Database B is used to generate adversarial examples with attack algorithms such as DeepFool, gradient methods, Elastic-Net, and the L2 attack. SmartBox provides a platform for evaluating new attacks, detection models, and mitigation approaches on a common face recognition benchmark.
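To make "computing a perturbation" concrete, here is a minimal sketch of one gradient method, the Fast Gradient Sign Method (FGSM), applied to a toy logistic-regression classifier. This is not SmartBox's API: the linear model stands in for a face recognition network, and the names `predict_proba` and `fgsm` are hypothetical. For binary cross-entropy with logit z = w.x + b, the input gradient is (sigmoid(z) - y) * w, so FGSM moves each pixel by eps in the direction of that gradient's sign.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def _sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(w: Sequence[float], b: float, x: Sequence[float], y: int, eps: float,
         lo: float = 0.0, hi: float = 1.0) -> List[float]:
    """One FGSM step: x_adv = clip(x + eps * sign(d loss / d x), lo, hi)."""
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]  # gradient of binary cross-entropy w.r.t. x
    return [min(hi, max(lo, xi + eps * _sign(gi))) for xi, gi in zip(x, grad)]


if __name__ == "__main__":
    w, b = [1.0, -2.0], 0.0
    x, y = [0.5, 0.5], 1
    x_adv = fgsm(w, b, x, y, eps=0.1)
    print("clean:", predict_proba(w, b, x), "adversarial:", predict_proba(w, b, x_adv))
```

Clipping to [lo, hi] keeps the perturbed input in a valid pixel range; detection and mitigation algorithms are then evaluated on such perturbed inputs.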

To assist the research community, the SmartBox code is available at the following link: